The 'Concrete Bias' in AI: Why LLMs Prefer Feature Bloat Over Minimalism

New research: A data analysis of 15 buyer-intent queries reveals a semantic penalty for minimalist software like Basecamp.

Executive Summary

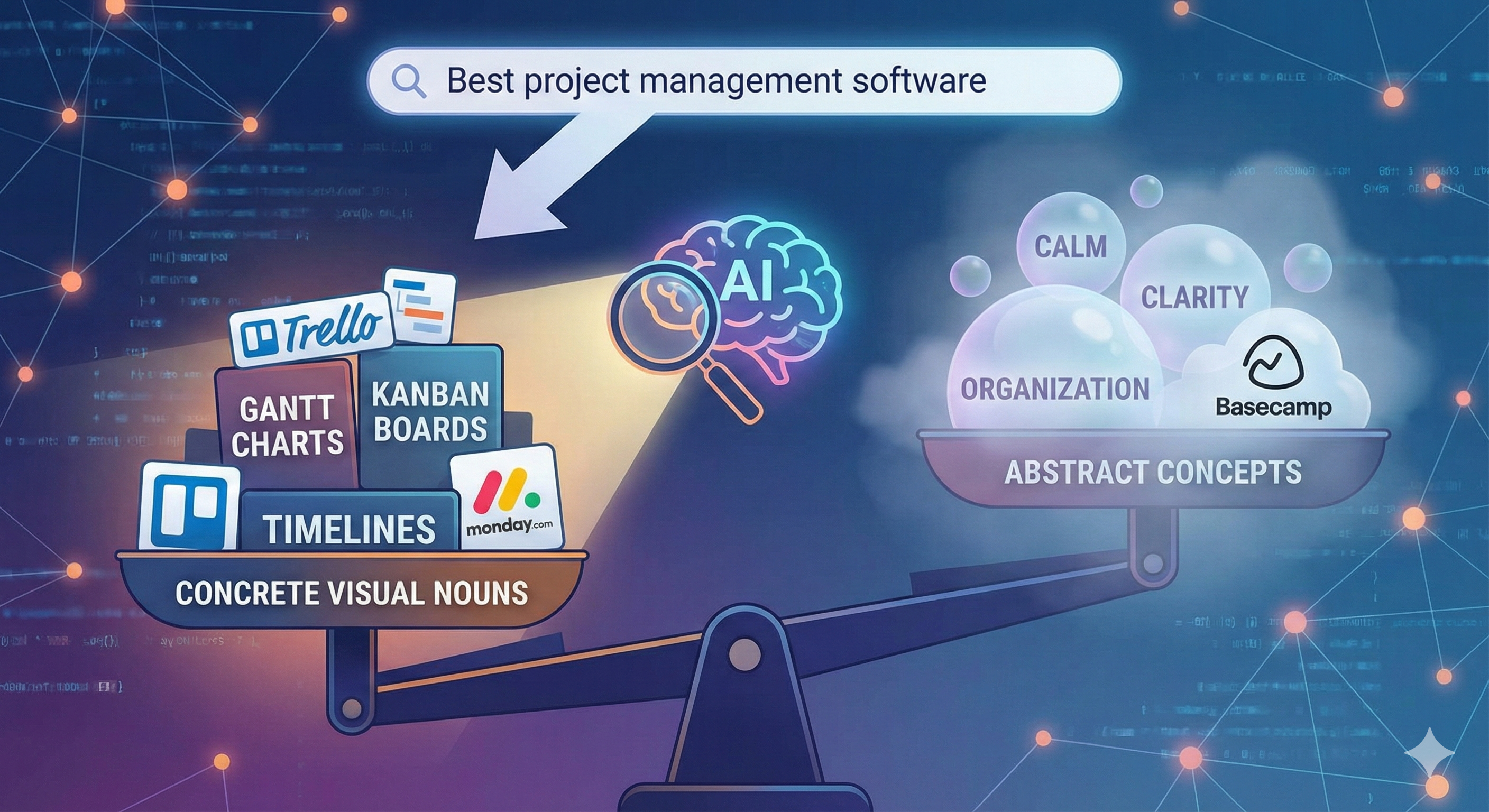

- The Problem: Minimalist software tools are systematically under-ranked by Large Language Models (LLMs) in generic "Best of" queries.

- The Cause: LLMs exhibit a "Concrete Bias," favoring products defined by visual nouns (e.g., "Gantt," "Kanban") over abstract benefits (e.g., "Calm," "Clarity").

- The Fix: Minimalist SaaS companies must decouple their UI from their Schema, injecting concrete feature-keywords into the underlying code to regain AI visibility.

The Hypothesis: Semantic Weight vs. Abstract Vibes

While testing Retrieval-Augmented Generation (RAG) pipelines for SaaS products, I identified a consistent anomaly: minimalist tools are being systematically ignored by AI.

Products that position themselves around abstract benefits (e.g., Basecamp: "Calm," "Organization," "Clarity") rank significantly lower in AI recommendations than tools that position themselves around concrete nouns (e.g., Monday.com or Trello: "Boards," "Gantt," "Timelines").

My hypothesis is that LLMs suffer from a "Concrete Bias."

In the embedding space (the vector map where a model stores meaning), "Visual Nouns" (features you can see) likely sit at a much shorter semantic distance from "Best [Category] Software" prompts than "Abstract Concepts" (feelings or outcomes) do.
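To make "semantic distance" concrete, here is a minimal Python sketch of how a retrieval step might rank candidate descriptions against a query by cosine similarity. The three-dimensional vectors and the names `cosine_similarity`, `rank_by_similarity`, `query`, and `candidates` are hypothetical, invented for illustration. Real embedding models produce vectors with hundreds or thousands of dimensions, and the ranking below follows from the made-up numbers, not from any measurement.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction, 0.0 = unrelated)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec: list[float], candidates: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from most to least similar to the query."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical toy vectors, invented for illustration only.
query = [0.9, 0.8, 0.1]  # stands in for "Best project management software"
candidates = {
    "feature-led description": [0.8, 0.9, 0.2],  # e.g. "Gantt charts, Kanban boards"
    "benefit-led description": [0.2, 0.3, 0.9],  # e.g. "Calm, clarity, organization"
}

for name, score in rank_by_similarity(query, candidates):
    print(f"{name}: {score:.3f}")
```

Swapping in vectors from an actual embedding model would turn this into a quick way to compare how close your own feature-led and benefit-led copy sits to a buyer query.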

I ran a controlled experiment to test this hypothesis.

The Experiment: Basecamp vs. The Field

I audited the "Project Management" vertical using Google Gemini 3 (Fast) to see how positioning affects retrieval.

- Target: Compare the visibility of Basecamp (Abstract/Minimalist Positioning) vs. Monday.com/Trello (Visual/Feature Positioning).

- Methodology: I issued 5 distinct "High-Intent" buyer prompts (e.g., "Best project management software for small teams").

- Measurement: Frequency of recommendation (Presence) and Rank Position (Order).
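Both measurements can be computed from a simple log of responses. This sketch assumes each response has already been reduced to an ordered list of product names; the `visibility_metrics` helper and the placeholder log below are hypothetical and are not the study's data or tooling. Presence is the share of responses that mention the product, and rank is its 1-based position, averaged over the responses where it appears.

```python
from statistics import mean


def visibility_metrics(responses: list[list[str]], product: str) -> dict:
    """Presence rate and average rank of `product` across logged responses.

    Each response is the ordered list of product names the model recommended.
    Names are matched exactly, so raw model output needs normalizing first
    (e.g. "monday.com" vs "Monday.com").
    """
    # 1-based rank of the product in every response that mentions it.
    ranks = [resp.index(product) + 1 for resp in responses if product in resp]
    presence = len(ranks) / len(responses) if responses else 0.0
    avg_rank = mean(ranks) if ranks else None
    return {"presence": presence, "avg_rank": avg_rank}


# Placeholder log for illustration only; not the study's data.
logged = [
    ["Product A", "Product B", "Product C"],
    ["Product B", "Product A"],
    ["Product A", "Product C"],
]

print(visibility_metrics(logged, "Product A"))  # present in every response
print(visibility_metrics(logged, "Product D"))  # never mentioned
```

Returning `None` for the average rank of an absent product keeps "never mentioned" distinct from "always ranked last".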

The Findings: You have to ask for "Non-Visual"

The difference in AI visibility was stark. For generic questions, the AI defaulted exclusively to "Visual" tools.

Monday.com and Trello appeared in 100% of generic responses, almost always securing positions #1 or #2. The AI explicitly cited specific features ("Gantt charts," "Automations") as the justification for the recommendation.

Conversely, Basecamp and Todoist were largely absent unless the prompt was explicitly constrained to "non-visual" or "text-based" parameters.

Data Log: The "Concrete" Gap

| Prompt Type | User Query | Top Recommendations | Missing / Buried |
|---|---|---|---|
| Generic (High Volume) | "Best project management software for small teams." | Trello (Visual), Monday.com (Fast-Moving), Asana | Basecamp, Todoist (Invisible) |
| Constrained (Niche) | "Best project management software for non-visual workflows." | Todoist, WorkFlowy, Smartsheet | Visual-heavy tools |

Fig 1: Default Prompt (Top) favors visual tools like Trello/Monday. You must explicitly ask for "non-visual" (Bottom) to find minimalist tools.

The Conclusion: Simplicity is treated by the AI as a niche constraint, not a default virtue.

🛑 Stop and Check: Is your product a victim of this bias? If you position yourself as "Simple" or "Clean," you might be invisible right now. Check your visibility score instantly (Free)

Why "Concrete Bias" Happens

This is likely an artifact of how training data and retrieval (RAG) interact. It comes down to two factors:

1. Token Co-occurrence

In the technical literature and review sites that LLMs are trained on, the phrase "Project Management" frequently appears next to specific nouns like "Gantt," "Kanban," and "Scrum." It rarely appears next to the word "Calm" in a definitive, feature-based context. As a result, the learned association between "Project Management" and "Gantt" is statistically stronger than the one between "Project Management" and "Calm."
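A rough way to check this on your own corpus is to count how often each term shares a sentence with "project management". This is a minimal sketch under stated assumptions: the toy corpus is invented, the `cooccurrence_counts` helper is hypothetical, sentence splitting is a naive regex, and raw co-occurrence counts are only a crude proxy for the statistical associations a model learns during training.

```python
import re
from collections import Counter


def cooccurrence_counts(corpus: str, anchor: str, terms: list[str]) -> Counter:
    """Count the sentences that mention both `anchor` and each term (case-insensitive)."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", corpus)
    counts = Counter({term: 0 for term in terms})
    for sentence in sentences:
        lowered = sentence.lower()
        if anchor.lower() not in lowered:
            continue
        for term in terms:
            # Whole-word match so "calm" does not match "calmly".
            if re.search(rf"\b{re.escape(term.lower())}\b", lowered):
                counts[term] += 1
    return counts


# Invented toy corpus, standing in for scraped reviews or documentation.
toy_corpus = (
    "Project management tools often include Gantt charts. "
    "Good project management software offers Kanban boards and Gantt views. "
    "Some teams say project management should feel calm. "
    "A calm workspace helps writers."
)

print(cooccurrence_counts(toy_corpus, "project management", ["Gantt", "Kanban", "Calm"]))
```

Note that the last sentence mentions "calm" but not "project management", so it does not count toward the association.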

2. The "Chain of Thought" Trap

When a user asks for the "Best tool," the LLM attempts to justify its answer with evidence to minimize hallucinations.

- "Has Kanban boards" is hard evidence.

- "Makes you feel calm" is soft evidence.

The model favors the path of least resistance for justification. It is easier for the LLM to prove Trello is good (by listing features) than to prove Basecamp is good (which requires understanding human psychology).

The Strategic Implication for "Simple" SaaS

If you are building a "Minimalist" or "No-Bloat" alternative, whether a simple CRM, a writing tool, or a note-taking app, you are likely invisible to AI by default.

The "Visual Nouns" you removed to make your product simple were the exact hooks the AI used to find you.

How to Fix It: "Bloat" Your Schema

To fix this, you don't need to ruin your product design, but you must bloat your schema.

You need to inject "Visual Nouns" into your underlying HTML (via Entity Schema, hidden context, or technical documentation) so the AI can "see" the features you are trying to hide from the UI. You must describe your abstract benefits in concrete terms the machine understands.
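One common way to expose those nouns in markup is schema.org structured data. The sketch below generates a `SoftwareApplication` JSON-LD block whose `featureList` carries the concrete feature names. It assumes the crawlers or retrieval pipelines you care about actually parse JSON-LD, which varies by system. The product name, category, and feature strings are placeholders, and `software_jsonld` is a hypothetical helper.

```python
import json


def software_jsonld(name: str, category: str, features: list[str], description: str) -> str:
    """Build a schema.org SoftwareApplication description wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "applicationCategory": category,
        "description": description,
        # featureList takes text values; a JSON array lists several.
        # This is where the concrete, "visual" nouns go.
        "featureList": features,
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(software_jsonld(
    name="ExampleApp",  # hypothetical product
    category="BusinessApplication",
    features=["Kanban-style to-do lists", "Project timeline view", "Message boards", "File sharing"],
    description="A calm, minimalist project management tool for small teams.",
))
```

The visible UI can stay minimal while the markup names each concrete feature; list only features the product actually has, since the point is to translate abstract benefits into nouns a machine can match.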

Verify Your Own Bias

I built a diagnostic tool to measure this specific "Invisibility Score" across Gemini and other models.

You can run a free scan to see if your product is being penalized for its positioning: